Fixing the libtorch_cuda_cu.so error after installing torch-sparse (Zhihu)

1. Environment: `nvcc -V` reports `Cuda compilation tools, release 10.1, V10.1.243`, while `nvidia-smi` reports CUDA 11.3. The following command was used to install torch-scatter, torch-sparse and related packages built for CUDA 11.3: `pip install torch==1.11.0+cu113 tor…`

I was using some custom CUDA extensions with torch, installed through a custom setup.py. After upgrading torch and the CUDA version, I could no longer import the extension; the import failed with:

"OSError: libtorch_cuda.so: cannot open shared object file: No such file or directory"
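The two tools in the environment description report different things: `nvidia-smi` shows the highest CUDA version the installed driver supports, while `nvcc -V` shows the locally installed toolkit, which is what a `setup.py` build of a CUDA extension compiles with. The sketch below, assuming you pass in the `nvcc -V` text and the CUDA version torch was built for (for example the value of `torch.version.cuda`), flags the 10.1 vs 11.3 mismatch. The helper names are hypothetical, and requiring an exact major.minor match is a deliberately conservative assumption.

```python
import re
from typing import Optional, Tuple


# Hypothetical helpers, not part of torch.
def parse_nvcc_release(nvcc_output: str) -> Optional[Tuple[int, int]]:
    """Extract (major, minor) from the 'release X.Y' part of `nvcc -V` output."""
    match = re.search(r"release (\d+)\.(\d+)", nvcc_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def parse_cuda_version(version: str) -> Tuple[int, int]:
    """Turn a string such as '11.3' into (11, 3)."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def toolkit_matches_torch(nvcc_output: str, torch_cuda: str) -> bool:
    """True only if the nvcc toolkit and torch's CUDA build agree on major.minor."""
    toolkit = parse_nvcc_release(nvcc_output)
    return toolkit is not None and toolkit == parse_cuda_version(torch_cuda)


if __name__ == "__main__":
    # nvcc output quoted in the environment description above.
    nvcc_out = "Cuda compilation tools, release 10.1, V10.1.243"
    print(parse_nvcc_release(nvcc_out))             # (10, 1)
    print(toolkit_matches_torch(nvcc_out, "11.3"))  # False
```

In practice the first argument would come from `subprocess.run(["nvcc", "-V"], capture_output=True, text=True).stdout`.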

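The `OSError` above means the dynamic loader could not find a library the extension was linked against. One likely cause, stated here as an assumption, is that the extension was built against the old torch and the upgraded torch no longer ships a library under that name; rebuilding the extension against the installed torch is the usual remedy. A minimal sketch for listing the unresolved libraries with `ldd` (Linux only) follows; the function names and the extension path are hypothetical. Note that `ldd` may also report torch's own libraries as missing unless the directory containing them (`torch/lib`) is on `LD_LIBRARY_PATH`.

```python
import subprocess
from typing import List


# Hypothetical helper: parse `ldd` output lines such as
# "\tlibtorch_cuda.so => not found".
def missing_libraries(ldd_output: str) -> List[str]:
    """Return the library names that `ldd` reports as 'not found'."""
    missing = []
    for line in ldd_output.splitlines():
        name, sep, target = line.strip().partition("=>")
        if sep and target.strip() == "not found":
            missing.append(name.strip())
    return missing


def check_extension(so_path: str) -> List[str]:
    """Run `ldd` on a compiled extension (hypothetical path) and list unresolved libraries."""
    result = subprocess.run(["ldd", so_path], capture_output=True, text=True, check=True)
    return missing_libraries(result.stdout)
```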
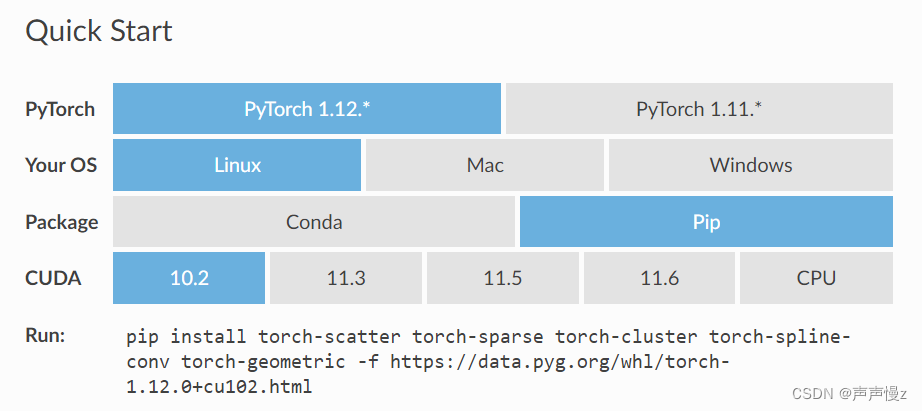

`pip install torch-scatter torch-sparse`

When running in a Docker container without an NVIDIA driver, PyTorch needs to evaluate the compute capabilities and may fail. In this case, ensure that the compute capabilities are set via `TORCH_CUDA_ARCH_LIST`, e.g.: `export TORCH_CUDA_ARCH_LIST="6.0 6.1 7.2+PTX 7.5+PTX"`

libtorch_cuda.so is too large (>2GB)

We use Bazel as part of a large monorepo to integrate with torch. In order to support large amounts of concurrent builds, we must execute our build actions remotely, and this entails serializing the files needed for each build action. However, protobuf serialization has a hard limit of 2GB, which makes the…

Semi-structured sparsity is also referred to as fine-grained structured sparsity or 2:4 structured sparsity. This sparse layout stores n elements out of every 2n elements, with n determined by the width of the tensor's data type (dtype). The most frequently used dtype is float16, where n=2, hence the term "2:4 structured sparsity".
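For the Docker case described above, the compute capabilities have to be spelled out in `TORCH_CUDA_ARCH_LIST` before building. The sketch below, assuming you already know which GPUs you target, formats the value from (major, minor, emit_ptx) triples and passes it to a `pip install` child process. The helper names are hypothetical; the capability list simply reproduces the example value quoted in the text.

```python
import os
import subprocess
import sys
from typing import Dict, Iterable, Tuple


# Hypothetical helpers for setting TORCH_CUDA_ARCH_LIST.
def arch_list(capabilities: Iterable[Tuple[int, int, bool]]) -> str:
    """Format (major, minor, emit_ptx) triples as a TORCH_CUDA_ARCH_LIST value."""
    entries = []
    for major, minor, ptx in capabilities:
        entry = f"{major}.{minor}"
        if ptx:
            entry += "+PTX"  # also embed PTX so newer GPUs can JIT-compile it
        entries.append(entry)
    return " ".join(entries)


def build_env(value: str) -> Dict[str, str]:
    """Copy the current environment with TORCH_CUDA_ARCH_LIST set."""
    env = dict(os.environ)
    env["TORCH_CUDA_ARCH_LIST"] = value
    return env


def install_with_arch_list(value: str) -> None:
    """Run `pip install torch-scatter torch-sparse` with the arch list set (not run here)."""
    subprocess.run(
        [sys.executable, "-m", "pip", "install", "torch-scatter", "torch-sparse"],
        env=build_env(value),
        check=True,
    )


if __name__ == "__main__":
    print(arch_list([(6, 0, False), (6, 1, False), (7, 2, True), (7, 5, True)]))
    # 6.0 6.1 7.2+PTX 7.5+PTX
```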

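To make the 2:4 pattern concrete for the float16 case (n=2), the sketch below keeps the two largest-magnitude values in every group of four consecutive elements and zeroes the other two, then checks the result. Magnitude-based selection is an assumption chosen for illustration; this is plain Python on a single row and does not use PyTorch's semi-structured sparsity API. The function names are hypothetical.

```python
from typing import List, Sequence


# Hypothetical helpers illustrating the 2:4 layout on one row.
def prune_2_4(row: Sequence[float]) -> List[float]:
    """Keep the 2 largest-magnitude values in each group of 4; zero the other 2."""
    if len(row) % 4 != 0:
        raise ValueError("row length must be a multiple of 4")
    out: List[float] = []
    for start in range(0, len(row), 4):
        group = list(row[start:start + 4])
        # Indices of the two entries with the largest absolute value in this group.
        keep = sorted(range(4), key=lambda i: abs(group[i]), reverse=True)[:2]
        out.extend(v if i in keep else 0.0 for i, v in enumerate(group))
    return out


def is_2_4_sparse(row: Sequence[float]) -> bool:
    """True if every group of 4 consecutive values has at most 2 nonzeros."""
    return len(row) % 4 == 0 and all(
        sum(1 for v in row[s:s + 4] if v != 0) <= 2 for s in range(0, len(row), 4)
    )


if __name__ == "__main__":
    print(prune_2_4([0.1, -0.9, 0.5, 0.2, 3.0, 1.0, -2.0, 0.0]))
    # [0.0, -0.9, 0.5, 0.0, 3.0, 0.0, -2.0, 0.0]
```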
torch_scatter import error (version / CUDA / .so): undefined symbol

All included operations work on varying data types and are implemented both for CPU and GPU. To avoid the hassle of creating a `torch.sparse_coo_tensor`, this package (torch-sparse) defines operations on sparse tensors by simply passing index and value tensors as arguments (with the same shapes as defined in PyTorch).

When I upgraded PyTorch from 1.9 to 1.10 and PyTorch Geometric from 1.7.1 to 2.0.2, I also upgraded torch-sparse and torch-scatter, but when I ran `import torch_geometric`, the…
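The index/value interface described above means a sparse matrix is just a pair of coordinate arrays plus a value array. The torch-sparse README documents a `coalesce` function that takes the `index` and `value` tensors and the matrix size (`m`, `n`), sorts the entries row-wise and merges duplicates. The pure-Python sketch below reproduces those semantics with the default sum reduction, using the small example from that README; it does not call torch-sparse, and the function here is a hypothetical stand-in.

```python
from typing import Dict, List, Sequence, Tuple


# Hypothetical pure-Python stand-in for coalescing a COO matrix.
def coalesce(
    row: Sequence[int], col: Sequence[int], values: Sequence[Sequence[float]]
) -> Tuple[List[int], List[int], List[List[float]]]:
    """Sort COO entries row-major and sum the values of duplicate (row, col) pairs."""
    summed: Dict[Tuple[int, int], List[float]] = {}
    for r, c, v in zip(row, col, values):
        if (r, c) in summed:
            summed[(r, c)] = [a + b for a, b in zip(summed[(r, c)], v)]
        else:
            summed[(r, c)] = list(v)
    keys = sorted(summed)  # tuples sort by row first, then column
    return [r for r, _ in keys], [c for _, c in keys], [summed[k] for k in keys]


if __name__ == "__main__":
    rows, cols, vals = coalesce(
        [1, 0, 1, 0, 2, 1],
        [0, 1, 1, 1, 0, 0],
        [[1, 2], [2, 3], [3, 4], [4, 5], [5, 6], [6, 7]],
    )
    print(rows, cols, vals)
    # [0, 1, 1, 2] [1, 0, 1, 0] [[6, 8], [7, 9], [3, 4], [5, 6]]
```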

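After a PyTorch upgrade like the one described above, a common remedy for import errors is to reinstall torch-scatter and torch-sparse from wheels built for the new torch and CUDA combination, which PyG hosts under `https://data.pyg.org/whl/torch-${TORCH}+${CUDA}.html`. The sketch below builds that URL and the matching `pip` command. The helper names are hypothetical, and it assumes an index page exists for the exact torch version you pass in; check the PyG installation instructions for your release.

```python
from typing import List, Optional


# Hypothetical helpers for picking the matching PyG wheel index.
def cuda_tag(cuda_version: Optional[str]) -> str:
    """Map a CUDA version such as '11.3' (torch.version.cuda) to 'cu113'; None means CPU."""
    if cuda_version is None:
        return "cpu"
    return "cu" + cuda_version.replace(".", "")


def pyg_wheel_index(torch_version: str, tag: str) -> str:
    """Build the data.pyg.org find-links URL for a torch version and CUDA tag."""
    base = torch_version.split("+")[0]  # drop a local suffix, e.g. '1.10.0+cu113'
    return f"https://data.pyg.org/whl/torch-{base}+{tag}.html"


def install_command(torch_version: str, tag: str) -> List[str]:
    """pip command that reinstalls torch-scatter and torch-sparse from that index."""
    return ["pip", "install", "torch-scatter", "torch-sparse", "-f",
            pyg_wheel_index(torch_version, tag)]


if __name__ == "__main__":
    print(" ".join(install_command("1.10.0+cu113", cuda_tag("11.3"))))
```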

